Proxem is a Paris-based text-analytics company (“basically, we convert your text into data”) whose solutions are applied to reputation management, human resources, and voice-of-the-customer applications. The company’s natural language processing (NLP) technology applies machine learning to multi-lingual language analysis.

Back in November 2015, around the time of the LT-Accelerate language technology conference, I caught up with CEO François-Régis Chaumartin for a quick Q&A. Well, it was quick for me: five questions. François spoke at LT-Accelerate alongside Proxem client Claude Fauconnet of global energy giant TOTAL, and he graciously responded at length to my questions on the application of text analytics to multi-lingual market understanding…

Seth Grimes> How does one derive market understanding from text-extracted information?

François-Régis Chaumartin> Several steps are crucial on the way from source identification to business insights. Do you want to identify your competitors in foreign markets? Discover game changers in emerging markets? Track the progress of a developing technology? More often than not you don’t even know where to look, and if you do, you will likely miss crucial information in languages that you don’t speak.

Our tool Discovery helps users discover sources that are relevant to their needs. The tool is based on an interaction loop between human and machine. Starting from a broad request by the human, the machine searches for documents related to the user’s query and analyzes them. The analysis detects sources, topics, and terms that might interest the user, across several languages. The user then chooses the relevant documents, telling the machine to focus on those topics.
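One well-known way to implement such a human-machine loop is Rocchio relevance feedback. The sketch below is an illustration under assumptions, not Discovery's actual algorithm: documents are bag-of-words vectors, the machine ranks them by cosine similarity to the query, and each round of user choices moves the query vector toward the documents kept and away from those rejected. The weights `alpha`, `beta`, and `gamma` use common textbook defaults, and the documents are invented.

```python
from collections import Counter
import math


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (lowercased, whitespace-tokenized)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-weight vectors."""
    dot = sum(w * b[t] for t, w in a.items() if t in b)
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank(query: Counter, docs: list[str]) -> list[int]:
    """Document indices ordered from most to least similar to the query."""
    scores = [cosine(query, vectorize(d)) for d in docs]
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)


def rocchio(query: Counter, kept: list[Counter], rejected: list[Counter],
            alpha: float = 1.0, beta: float = 0.75, gamma: float = 0.15) -> Counter:
    """Rocchio update: move the query toward kept documents, away from rejected ones."""
    updated: Counter = Counter()
    for term, w in query.items():
        updated[term] += alpha * w
    for group, weight in ((kept, beta), (rejected, -gamma)):
        for doc in group:  # an empty group contributes nothing
            for term, w in doc.items():
                updated[term] += weight * w / len(group)
    # Keep only positively weighted terms, a common convention for Rocchio.
    return Counter({t: w for t, w in updated.items() if w > 0})


docs = [
    "solar panel efficiency record in china",
    "solar eclipse viewing tips",
    "perovskite solar cell efficiency breakthrough",
    "eclipse ide plugin release",
]
query = vectorize("solar")
print(rank(query, docs))  # [1, 2, 0, 3]

# The user keeps document 0 and rejects document 1; the loop runs again.
query = rocchio(query, kept=[vectorize(docs[0])], rejected=[vectorize(docs[1])])
print(rank(query, docs))  # [0, 2, 1, 3]
```

After one round of feedback the perovskite article overtakes the unrelated eclipse article, even though the user never typed "efficiency": the expanded query picked that term up from the kept document.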

Once the relevant sources have been identified, a more in-depth analysis can take place, with named entity recognition (NER), correlation scores between topics or entities, time series analysis, and so on. A clustering algorithm makes it possible to filter masses of documents efficiently.
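Proxem's clustering algorithm is not described here. As a stand-in, the sketch below uses spherical k-means (k-means on unit-length term-frequency vectors, compared by cosine similarity) and then keeps only the cluster of interest, which is one simple way clustering can filter a large set of documents. The initial centroids are picked by hand to keep the example deterministic; in practice they would be chosen randomly or with k-means++. The documents are invented.

```python
from collections import Counter
import math


def tf_vector(text: str) -> dict[str, float]:
    """Unit-length term-frequency vector for one document."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {t: c / norm for t, c in counts.items()}


def similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Dot product, which equals cosine similarity for unit-length vectors."""
    return sum(w * b.get(t, 0.0) for t, w in a.items())


def centroid(vectors: list[dict[str, float]]) -> dict[str, float]:
    """Mean direction of a cluster: the summed vectors rescaled to unit length."""
    total: Counter = Counter()
    for v in vectors:
        total.update(v)
    norm = math.sqrt(sum(w * w for w in total.values()))
    return {t: w / norm for t, w in total.items()} if norm else {}


def kmeans(docs: list[str], seeds: list[int], max_iter: int = 20) -> list[int]:
    """Spherical k-means returning one cluster label per document.

    `seeds` are document indices used as initial centroids (deterministic demo).
    """
    vecs = [tf_vector(d) for d in docs]
    centroids = [vecs[i] for i in seeds]
    labels: list[int] = []
    for _ in range(max_iter):
        # Assignment step: each document joins its most similar centroid.
        new_labels = [
            max(range(len(centroids)), key=lambda k: similarity(v, centroids[k]))
            for v in vecs
        ]
        if new_labels == labels:
            break  # converged: no document changed cluster
        labels = new_labels
        # Update step: recompute centroids, keeping the old one if a cluster empties.
        centroids = [
            centroid([v for v, lab in zip(vecs, labels) if lab == k]) or centroids[k]
            for k in range(len(centroids))
        ]
    return labels


docs = [
    "oil price rises as opec cuts output",
    "opec output cut lifts oil price",
    "new smartphone camera review",
    "smartphone battery and camera test",
    "oil demand and opec quotas",
]
labels = kmeans(docs, seeds=[0, 2])
print(labels)  # [0, 0, 1, 1, 0]

# Filtering: keep only the cluster that matters to the analyst.
energy_docs = [d for d, lab in zip(docs, labels) if lab == 0]
```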

Seth> Multi-lingual challenges can be profound. What techniques do you apply, to facilitate analyses across languages?

François> Analyzing documents in several languages is challenging because traditional NLP approaches require as many experts as there are languages you want to process. We invested heavily in Machine Learning to reduce the amount of manpower needed.

Traditionally, linguists would handcraft features considered useful for a given ML task. For example, if you wanted to detect brands in a text, you would use a dictionary of brands created semi-manually. You would also try to distinguish a misspelling from an unknown brand. You would create some features based on rules, and a machine learning algorithm would learn which features are the most useful. In a sentence like “the last Foo phone is amazing,” Foo is likely to be a brand, so the linguist would try to create features for this kind of sentence. This task requires long hours of trial and error, of exploring the data, of spotting unrecognized brands and adding new rules to create new features that help cover every case. And all of this effort had to be multiplied by the number of domains and the number of languages.
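A minimal sketch of that traditional workflow: handwritten rules produce features, and a learner (here a simple perceptron, chosen for brevity) decides how much each feature matters. The brand dictionary, word lists, feature names, and training sentences are all hypothetical.

```python
from collections import defaultdict

# Hypothetical hand-built resources of the kind a linguist would maintain.
BRAND_DICTIONARY = {"acme", "globex"}
PRODUCT_NOUNS = {"phone", "laptop", "car"}
KNOWN_WORDS = {"the", "last", "new", "my", "a", "i", "is", "amazing",
               "bought", "broke", "phone", "laptop", "car"}


def features(tokens: list[str], i: int) -> list[str]:
    """Rule-based features for token i, of the kind traditionally designed by hand."""
    word = tokens[i]
    prev = tokens[i - 1].lower() if i > 0 else "<s>"
    nxt = tokens[i + 1].lower() if i + 1 < len(tokens) else "</s>"
    feats = ["bias"]
    if i > 0 and word[:1].isupper():
        feats.append("capitalized_mid_sentence")
    if word.lower() in BRAND_DICTIONARY:
        feats.append("in_brand_dictionary")
    if nxt in PRODUCT_NOUNS:
        feats.append("followed_by_product_noun")
    if word.lower() not in KNOWN_WORDS:
        feats.append("unknown_word")  # misspelling or unseen brand: rules alone can't tell
    if prev in {"the", "last", "new"}:
        feats.append("after_modifier")
    return feats


def predict(weights: dict[str, float], tokens: list[str], i: int) -> bool:
    """Token i is tagged as a brand when its weighted feature sum is positive."""
    return sum(weights.get(f, 0.0) for f in features(tokens, i)) > 0


def train(examples, epochs: int = 10) -> dict[str, float]:
    """Perceptron: learn how much each handcrafted feature matters."""
    weights: dict[str, float] = defaultdict(float)
    for _ in range(epochs):
        for tokens, i, is_brand in examples:
            if predict(weights, tokens, i) != is_brand:
                step = 1.0 if is_brand else -1.0
                for f in features(tokens, i):
                    weights[f] += step
    return dict(weights)


TRAINING = [
    ("my new Acme phone broke".split(), 2, True),
    ("my new phone broke".split(), 2, False),
    ("I bought a Globex laptop".split(), 3, True),
    ("we visited Paris last week".split(), 2, False),
    ("her old Initech car broke".split(), 2, True),
]
weights = train(TRAINING)
sentence = "the last Foo phone is amazing".split()
print(predict(weights, sentence, 2))  # True: "Foo" is tagged as a brand
print(predict(weights, sentence, 3))  # False: "phone" is not
```

With these toy examples the learner ends up trusting the "followed by a product noun" feature most, so "Foo" is tagged as a brand even though it is absent from the dictionary. Every feature, though, still had to be imagined and coded by a person, which is the effort that has to be repeated per domain and per language.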

Seth> François, you’ve shared thoughts with me about deep learning in particular, applied to natural language…

François> What’s new with Deep Learning? The machine now learns both how to exploit the features and how to create the relevant features from raw text. Deep Learning algorithms make us less dependent on human expertise when adapting from one domain to another. All they need are vast amounts of data, which are becoming easier to find, and CPU/GPU time, which is getting cheaper. Deep Learning algorithms now hold state-of-the-art results on most NLP tasks in English, thanks to the massive amount of labeled data available in that language.

At Proxem we are particularly interested in handling several languages, not just English, so one of our main focuses is how to adapt a Deep Learning model trained for English to another language with less labeled data available. Our approach relies on other Deep Learning techniques that leverage the large volumes of raw text available on the Internet for most languages. We published a paper on this subject at EMNLP 2015, called Trans-gram: Fast Cross-Lingual Embeddings.
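The payoff of cross-lingual embeddings can be shown without training any: if words from several languages share one vector space, a classifier fitted on English labels applies directly to French words. The sketch assumes such a space already exists; the 3-dimensional vectors are invented (not Trans-gram output), and a nearest-centroid classifier is used only for simplicity.

```python
import math

# Made-up vectors in a shared English/French space. Learned cross-lingual
# embeddings have hundreds of dimensions; these values are chosen only so that
# words with similar meanings lie close together across languages.
EMBEDDINGS: dict[tuple[str, str], list[float]] = {
    ("en", "excellent"): [0.9, 0.1, 0.0],
    ("en", "great"): [0.8, 0.2, 0.1],
    ("en", "awful"): [-0.8, 0.1, 0.0],
    ("en", "broken"): [-0.7, 0.3, 0.1],
    ("fr", "génial"): [0.85, 0.15, 0.05],
    ("fr", "nul"): [-0.75, 0.2, 0.0],
}


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def train_centroids(labeled_english: list[tuple[str, str]]) -> dict[str, list[float]]:
    """Nearest-centroid classifier trained on English words only."""
    groups: dict[str, list[list[float]]] = {}
    for word, label in labeled_english:
        groups.setdefault(label, []).append(EMBEDDINGS[("en", word)])
    return {label: [sum(col) / len(vs) for col in zip(*vs)] for label, vs in groups.items()}


def classify(centroids: dict[str, list[float]], lang: str, word: str) -> str:
    """Works for any language whose words live in the same vector space."""
    vec = EMBEDDINGS[(lang, word)]
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))


centroids = train_centroids([
    ("excellent", "positive"), ("great", "positive"),
    ("awful", "negative"), ("broken", "negative"),
])
print(classify(centroids, "fr", "génial"))  # positive
print(classify(centroids, "fr", "nul"))     # negative
```

The classifier never saw a French word during training; it works only because "génial" and "nul" were placed near their English counterparts. Learning such a shared space from data is what cross-lingual embedding methods such as Trans-gram address; this sketch does not attempt that part.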

Seth> How do you work with concepts, expressions, and idioms that may not translate easily from one language to another?

François> Our preferred approach is to analyze documents in their original languages rather than translate them. We extract linguistic clues, project them onto language-agnostic concepts, and organize those concepts in business-oriented taxonomies. We translate documents only when users want to see the entire contents in their own language. This approach prevents loss of information during analysis. To align the high-level concepts, we use Wikipedia in multiple languages and other multi-lingual aligned corpora that give translations at the concept level. Using the various localized Wikipedias ensures good language coverage.
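A toy illustration of analyzing text in its original language and rolling the results up to language-agnostic concepts and a business taxonomy. The alignment table stands in for what interlanguage links between Wikipedia editions could provide; its entries, the concept IDs, and the two-category taxonomy are hypothetical, and plain substring matching replaces real linguistic analysis.

```python
from collections import Counter

# Hypothetical alignment table: language-specific terms -> language-agnostic concept IDs.
# Entries like these could be derived from Wikipedia interlanguage links.
TERM_TO_CONCEPT: dict[tuple[str, str], str] = {
    ("en", "sleeve"): "concept:sleeve",
    ("fr", "manche"): "concept:sleeve",
    ("zh", "袖子"): "concept:sleeve",
    ("en", "too long"): "concept:too_long",
    ("fr", "trop longue"): "concept:too_long",
    ("zh", "太长"): "concept:too_long",
    ("en", "price"): "concept:price",
    ("fr", "prix"): "concept:price",
    ("zh", "价格"): "concept:price",
}

# Hypothetical business taxonomy grouping concepts into categories.
TAXONOMY = {
    "concept:sleeve": "Product fit",
    "concept:too_long": "Product fit",
    "concept:price": "Pricing",
}


def extract_concepts(lang: str, text: str) -> set[str]:
    """Naive substring matching in the document's own language; no translation."""
    lowered = text.lower()
    return {concept for (term_lang, term), concept in TERM_TO_CONCEPT.items()
            if term_lang == lang and term in lowered}


def category_counts(docs: list[tuple[str, str]]) -> Counter:
    """Number of documents touching each taxonomy category, across all languages."""
    counts: Counter = Counter()
    for lang, text in docs:
        counts.update({TAXONOMY[c] for c in extract_concepts(lang, text)})
    return counts


docs = [
    ("en", "The sleeves are too long for me"),
    ("fr", "Les manches sont trop longues"),
    ("zh", "袖子太长了"),
    ("fr", "Le prix est correct"),
]
print(category_counts(docs))  # Counter({'Product fit': 3, 'Pricing': 1})
```

No document is translated, yet the English, French, and Chinese complaints about sleeve length are counted together under one category.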

Analysis in several languages often brings unexpected insights into what is happening in other countries or other cultures. For instance, one of our customers, which designs and sells sportswear, discovered that Chinese people find the sleeves of its sweaters too long, a phenomenon not mentioned in European sources.

Seth> Finally, what technical and business challenges are you working to solve next?

François> Our ultimate goal is to create a web and text mining application that a business end user can easily configure and use. We are putting a lot of effort into streamlining the process of discovering sources and creating custom analyzers on the fly, so that users can focus on what matters to them: using the analysis results to gain insights into their particular concerns.

From an R&D perspective, we are working hard, using deep learning, to improve sentiment analysis, NER, and classification to the point where their results are good enough for real-life needs.

An extra —

To get the client’s point of view, I asked Claude Fauconnet (again, a Proxem client, who spoke alongside François at last November’s LT-Accelerate conference) what TOTAL values most in Proxem as a solution provider, and why TOTAL chose Proxem. Claude’s response:

- Ability to perform unguided Web exploration, which involves a lot of heuristics and gives very interesting results.

- Proxem’s approach complements traditional monitoring tools.

- Confidence in Proxem’s scientific foundations.

- Proxem’s DNA is R&D, with high applicability to both our innovation mission and real business needs.

My thanks to the two gentlemen for responding to my questions. Please visit the LT-Accelerate site to view their November 2015 presentation deck.

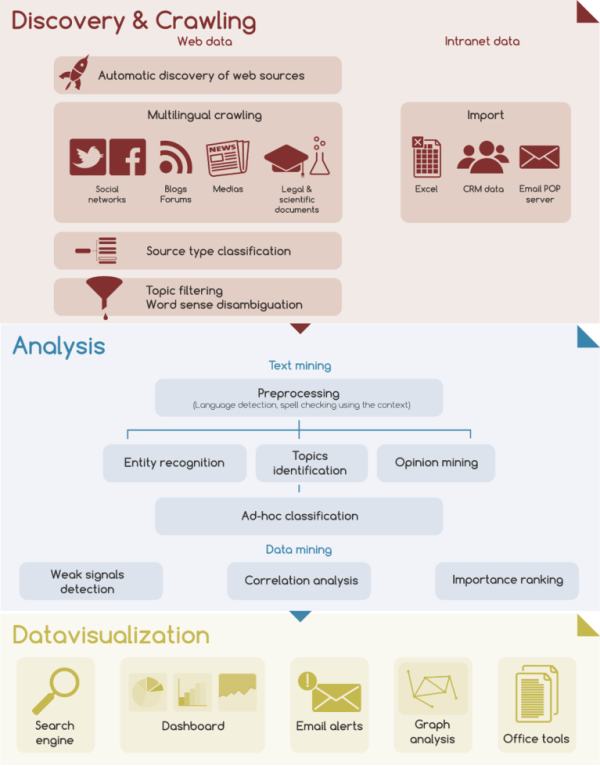

Proxem’s semantic analysis method is diagrammed below.

It progresses from corpus to document annotation via language detection, contextual spelling correction, and named entity extraction, followed by relation, sentiment, classification, and relevancy analysis; then clustering; and finally visualization and reporting.
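The ordering of these stages can be sketched as a chain of functions that each add annotations to a document, followed by a corpus-level step. Every stage here is a toy stand-in under stated assumptions: stopword counting for language detection, a lookup table for spelling correction (not contextual), a gazetteer for named entities, and a word-list sentiment score. Relation extraction, classification, and relevancy are omitted, and grouping documents by shared entity replaces real clustering and visualization. All function names and word lists are hypothetical.

```python
from typing import Callable

Doc = dict  # a document and its accumulated annotations


def detect_language(doc: Doc) -> Doc:
    """Toy detector: the language whose stopwords occur most often wins."""
    stopwords = {"en": {"the", "is", "and", "of"}, "fr": {"le", "la", "est", "et", "de"}}
    tokens = doc["text"].lower().split()
    doc["lang"] = max(stopwords, key=lambda lang: sum(t in stopwords[lang] for t in tokens))
    return doc


def correct_spelling(doc: Doc) -> Doc:
    """Toy lookup table; a contextual corrector would also weigh neighboring words."""
    fixes = {"amazng": "amazing", "telefone": "téléphone"}
    doc["tokens"] = [fixes.get(t, t) for t in doc["text"].split()]
    return doc


def extract_entities(doc: Doc) -> Doc:
    gazetteer = {"Foo": "BRAND", "TOTAL": "ORG"}  # hypothetical entity list
    doc["entities"] = [(t, gazetteer[t]) for t in doc["tokens"] if t in gazetteer]
    return doc


def score_sentiment(doc: Doc) -> Doc:
    lexicon = {"amazing": 1, "great": 1, "nul": -1, "broken": -1}  # hypothetical lexicon
    doc["sentiment"] = sum(lexicon.get(t.lower(), 0) for t in doc["tokens"])
    return doc


# Document-level stages, applied in order.
DOC_STAGES: list[Callable[[Doc], Doc]] = [
    detect_language, correct_spelling, extract_entities, score_sentiment,
]


def annotate(corpus: list[str]) -> list[Doc]:
    docs = []
    for text in corpus:
        doc: Doc = {"text": text}
        for stage in DOC_STAGES:
            doc = stage(doc)
        docs.append(doc)
    return docs


def group_and_report(docs: list[Doc]) -> dict[str, dict[str, int]]:
    """Corpus-level stand-in for clustering plus reporting: group by entity, summarize."""
    report: dict[str, dict[str, int]] = {}
    for doc in docs:
        for name, _label in doc["entities"]:
            row = report.setdefault(name, {"documents": 0, "net_sentiment": 0})
            row["documents"] += 1
            row["net_sentiment"] += doc["sentiment"]
    return report


docs = annotate([
    "the last Foo phone is amazng",
    "le telefone Foo est nul",
    "TOTAL and the great energy transition",
])
print([d["lang"] for d in docs])  # ['en', 'fr', 'en']
print(group_and_report(docs))
# {'Foo': {'documents': 2, 'net_sentiment': 0}, 'TOTAL': {'documents': 1, 'net_sentiment': 1}}
```

Keeping each stage as a function with the same signature makes it easy to swap a toy stage for a real model without touching the rest of the chain.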